Original product concept - a wearable ring

My role

This project was the joint effort of our four group members: Khoa Tran, Anusha Raju, Qingtong Viola Han, and myself. While we did many things together, including all of the conceptual design, we also divided up a lot of tasks. As a result, I had next to nothing to do with assembling the penguin's hardware or with designing graphical content to promote and explain our product.

My main focus was instead on development, for which I was solely responsible, except for the code handling socket communication between our microcontroller and our server. A roughly chronological list of most of what I did during my development and design process in this project is given below.

- Voice Recognition - finding and implementing an Open Source library

- Creating our own grammar of accepted commands for the voice recognition

- Simple main loop for asking questions

- Voice Synthesizer - implementing an Open Source library

- Setting up a test environment with fake objects to ask questions about

- Finding a purpose - changing the purpose of the interaction

- Implementing our clue system

- Instructing users what to do

- Repeat-voice command

- Enhancing interaction - adding sounds and ability to interrupt

- Improving dialog - adding a lot of variation to the penguin's answers

- Keeping track of users' understanding of the interaction - giving more instructions

- Allowing the penguin to take the lead and speak when the user is quiet

- First-time users - making sure they don't get stuck because they don't understand the interaction

- Implemented a web site for our product (not involved in the design)

- Automatically querying the penguin about objects when scanned

Having been behind this much of the functionality, in terms of design, testing, and implementation, I believe my contribution to our project was significant, and that without me the group would have struggled to achieve the same results.


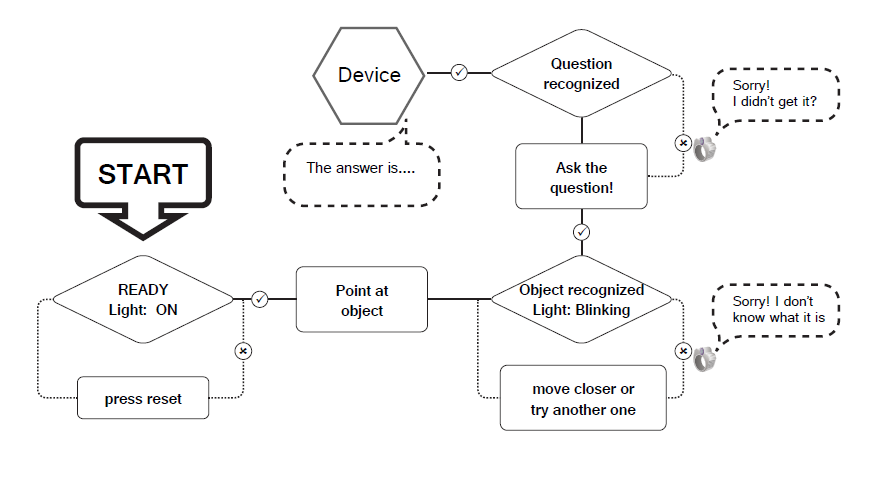

Conceptual design

Before I started coding, we worked on the conceptual design. During this process we came up with the idea we would keep for the whole project: a toy that kids would use to ask questions. We settled on three core values for our device: it should be simple to use, mobile, and fun. A brief outline of the reasoning behind each criterion is provided below.

Simple interaction

Our core values - Simple, Mobile, Fun

We decided that the interaction needed to be simple in order to make it easy and intuitive for kids to use. As we wanted kids to be able to play with the toy independently, the interaction had to be self-explanatory to the degree that a kid could figure it out on their own. Our product needed to be simple not only conceptually, in terms of workflow, but also in terms of the physical manipulation and dexterity needed to use it. Young kids might not have fully developed motor skills, which makes the kind of fine-grained navigation usually needed for digital devices especially hard for them. While we met the physical-simplicity requirement quite easily by using an audio interface, making the interface conceptually easy to understand kept me busy throughout the project. The especially crucial part was explaining to kids how to use a product that, despite being completely physical, offers almost no helpful affordances to explain itself. The invisibility of our audio interface, and the difficulty of getting our device to explain itself to users, was my main challenge in this project.

Fully mobile

The requirement for the product to be mobile is closely connected to the requirement that it be tangible and naturally easy to use. We kept this criterion separate because we felt that losing mobility would severely reduce the value of our product. As kids naturally move around, a stationary device will only be played with for as long as the kid is willing to stay in one place. To let them run around with the toy, we decided it had to be mobile. Mobility was also one of the stronger points we wanted to make with our product about the type of interaction we would like to see more of in future devices.

Fun to use

The final requirement we decided on was that using the device had to be a fun and pleasurable experience. On the surface it might appear that we would satisfy this criterion easily, given that we were designing a funny-looking toy with the unusual ability to speak to you. However, we realised early on that these novelty effects would only take us so far. Imagining the toy in a competitive environment full of other shiny, funny toys, we realised that keeping kids engaged past the first interaction would take extra effort if our toy was to be something a kid would actually use more than once.

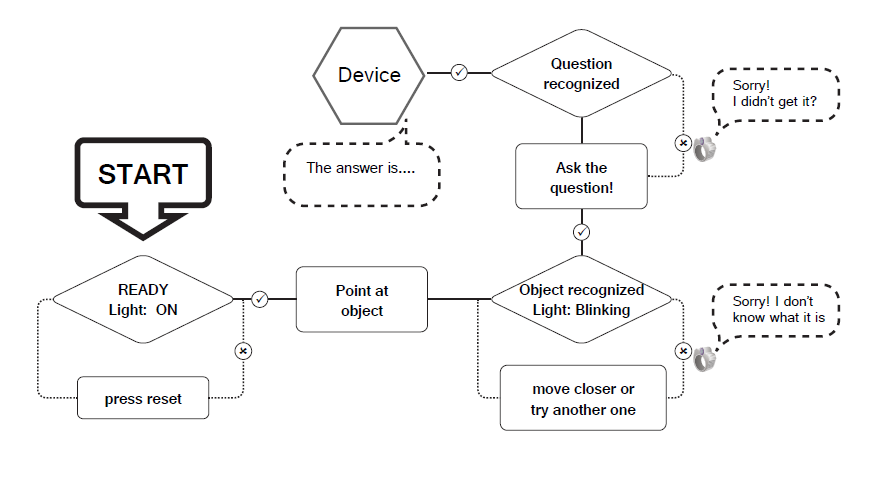

Relatively early - untested - interaction plan


Early testing

As soon as I had the voice recognition, voice synthesizer, and my test environment up and running, I immediately started testing our proposed interaction. I consider testing early and often to be at the core of good design, and it is something I believe we should have done much more throughout this project. Unfortunately, our development of software, hardware, and other parts happened quite independently, and it wasn't until late in the project that we managed to really get together and perform 'real' tests. It also did not help that the hardware was almost always unstable because it was still under development, and that we did not receive our wireless headset until one of the last weeks of the project.

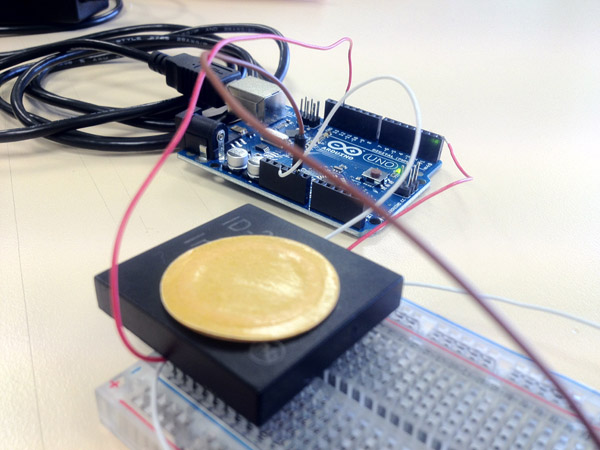

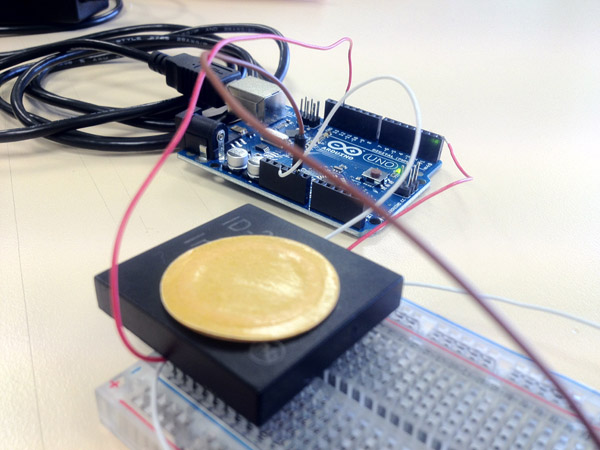

Early RFID reader hardware setup - Hard to include in general mobile interaction testing

We had created an interaction plan for our device, and I was very keen on testing it, but found no really good way to do so. I would sit by myself in front of my Java code, speaking straight to my computer and using my imagination to fill the gap between my setup and one where our users would be moving around carrying our mobile toy. Let's just say it was not as easy as it could have been. When I really needed to test the physical aspects of our future device, I would take either our not-yet-working device or any physical object of the right weight and shape, and walk around with it, imagining it was doing what we were building it to do.


Making the interaction meaningful

When I was sitting in front of my computer repeating "What is this, What is this" (and receiving simple answers such as "this is a shoe"), I quickly realised that the interaction we had imagined was really dull and more or less completely lacked a purpose. I had been worrying about this since before our project even started, but this was when it really hit me. Around the same time, we had jointly decided to try using a penguin as our toy's body, a decision that made it into the final product. After some discussion with other group members, I came up with the idea of giving the interaction a purpose: having the penguin ask the kids for help finding things, rather than passively waiting for them to ask questions about things.

My suggestion was to have the penguin lead the interaction by giving users clues about a thing he was looking for, drawing on kids' natural curiosity and their willingness to help a cute, helpless penguin.

'What is this?' - Testing our audio interface

As part of this suggestion, we briefly had our penguin wear glasses. They were meant to explain to kids why the penguin needed help, and why he could not see and locate things himself: his vision was really bad. We later dropped the glasses, as we found that they did not help our users understand the concept.

In addition to giving the toy this new purpose, I decided that we also needed a much more polished and rewarding interaction. The crucial thing I suggested here was to start rewarding users for playing with the penguin, that is, for finding the things he asks for. I started implementing voice rewards in which the penguin would tell the kid how awesome they were for finding what he had been looking for for ages. Later in the project, I added sound effects and continuously added more varied responses from the penguin, so that the interaction would not become repetitive. For the rest of the project, a lot of my time went into this kind of polishing, making the interaction more and more pleasurable.
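Our original Java code is not reproduced here, so the following is only a minimal sketch of one way to get this kind of variation, assuming the penguin's answers are stored as plain strings. `PhrasePool` and `next()` are hypothetical names. The idea is to pick a random phrase from a pool while never repeating the one used immediately before, which avoids the most noticeable form of repetition.

```java
import java.util.List;
import java.util.Random;

/**
 * Hypothetical helper (not the project's actual code): returns a random
 * phrase from a fixed pool, never returning the same phrase twice in a row.
 */
final class PhrasePool {
    private final List<String> phrases;
    private final Random random;
    private int lastIndex = -1; // -1 means nothing has been said yet

    PhrasePool(List<String> phrases, Random random) {
        if (phrases.isEmpty()) {
            throw new IllegalArgumentException("need at least one phrase");
        }
        this.phrases = List.copyOf(phrases);
        this.random = random;
    }

    String next() {
        int n = phrases.size();
        int index;
        if (n == 1) {
            index = 0;
        } else if (lastIndex < 0) {
            // First call: every phrase is allowed.
            index = random.nextInt(n);
        } else {
            // Pick uniformly among the other n - 1 phrases by drawing from
            // [0, n - 2] and shifting past the previously used index.
            index = random.nextInt(n - 1);
            if (index >= lastIndex) {
                index++;
            }
        }
        lastIndex = index;
        return phrases.get(index);
    }
}
```

Passing in a seeded `Random` makes the choices reproducible while testing; on the device itself an unseeded `new Random()` would do.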


Making speech interaction easy to discover and understand

When our penguin wore glasses

As soon as I had this new clue system going, our interaction started making much more sense. I was still sitting by myself talking to my computer, but at least the interaction had a bit more depth and seemed more meaningful than before. However, as soon as I started handing my setup over to other people to see how they reacted to it, I realised it was terrible at explaining itself and how it should be used.

The irony here is that we had deliberately decided to forgo a digital interface in favour of something tangible, but in doing so we had somehow managed to create a physical device with fewer affordances for how to use it than a digital product might have had! This was of course because of our choice of a transient medium, audio, for our interaction, but it proved an immense challenge to solve. As an interaction designer, and as the one sitting in front of the code testing the interaction, I took it upon myself to solve this problem: I would make our invisible interaction transparent to users.

Offering instructions on how to use it

I started iteratively implementing and testing solutions to this issue, progressively making our interaction more and more transparent to our users. As a first step, I made the penguin sometimes tell users what they had to do: exactly which commands he accepted for giving them a clue, or for answering whether the thing they had found was the thing he was looking for. The problem was deciding when to give this information so that users got it when they needed it. As we wanted the device to be intuitive to use, we did not want to resort to having users ask for 'help'. My first solution was instead to give this information as part of the first thing the penguin says in an interaction.

The problem with this solution was that we did not know when an interaction with one user ended and a new one started. This was because we wanted neither to require a specific, unintuitive start command, nor to have users reset or turn the device off and on every time they used it, as it sometimes took almost a minute to start up.

One of our tutors suggested that we could fill silent moments in the interaction by having the penguin take the lead and start speaking by himself, and this gave me an idea. I realised we could reasonably guess that not receiving any (accepted) commands from users meant that they did not know what to do. After a fair amount of refactoring, I managed to make our penguin speak by himself after a certain period of silence. On these occasions, he would give a random instruction on how to perform one of the steps in the interaction (e.g. 'you can ask me for more clues', or 'when you have found something, you can ask me if it is what I am looking for').
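The silence-triggered instructions could be sketched roughly as below, assuming a main loop that polls regularly and is notified whenever a command is accepted. This is a hypothetical illustration rather than our actual code: `SilenceWatcher`, `onActivity` and `poll` are made-up names, and the timeout is left to the caller because the delay is only described above as "a certain time". Time is passed in explicitly instead of being read from the system clock, which keeps the logic easy to test.

```java
import java.util.List;
import java.util.Optional;
import java.util.Random;

/**
 * Hypothetical sketch: if no accepted command arrives within a timeout,
 * return a random usage instruction for the penguin to speak.
 */
final class SilenceWatcher {
    private final long timeoutMillis;
    private final List<String> instructions;
    private final Random random;
    private long lastActivityMillis;

    SilenceWatcher(long timeoutMillis, List<String> instructions, Random random, long startMillis) {
        if (instructions.isEmpty()) {
            throw new IllegalArgumentException("need at least one instruction");
        }
        this.timeoutMillis = timeoutMillis;
        this.instructions = List.copyOf(instructions);
        this.random = random;
        this.lastActivityMillis = startMillis;
    }

    /** Call whenever the recognizer accepts a command. */
    void onActivity(long nowMillis) {
        lastActivityMillis = nowMillis;
    }

    /** Polled from the main loop; returns an instruction once the silence has lasted long enough. */
    Optional<String> poll(long nowMillis) {
        if (nowMillis - lastActivityMillis < timeoutMillis) {
            return Optional.empty();
        }
        // Restart the timer so the penguin waits a full timeout before speaking again.
        lastActivityMillis = nowMillis;
        return Optional.of(instructions.get(random.nextInt(instructions.size())));
    }
}
```

In a main loop, one might call `watcher.poll(System.currentTimeMillis())` on each iteration and hand any returned text to the voice synthesizer. Choosing the instruction at random is exactly what causes the weakness discussed next: nothing guarantees that every instruction is eventually heard.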

This was still not good enough. As our interaction had at least two important steps the user had to understand (asking for clues, and asking whether a found object was the right thing), there was a chance that even after a long silence the user would not have received both pieces of information. This was partly because, as with the rest of our interaction, I had made the penguin's responses somewhat random, so that he would be less predictable and seem less like a machine. Simply cycling through the instructions in order would not have been a good solution either, as users would keep hearing the same information over and over, even after they had already learnt the interaction.

Tracking the user's understanding of the interaction

My final effort was to do some simple tracking of how well the user understood the interaction. The problem I have already mentioned, that I never knew when a new user's interaction started, forced me to take some shortcuts. I tracked two variables: whether the user had successfully asked for a clue about an object, and whether he or she had successfully asked the penguin whether a found object was what he was looking for. Until the user had asked for a clue, the penguin would occasionally explain how to ask for one. Similarly, once a clue had been given, if enough time passed without the user asking anything new, in particular whether a found object was the right one, the penguin would start explaining what the user had to do for him to say what they had found and whether it was what he was looking for. These instructions were only given when needed, and only occasionally, so as not to become disturbing, but I was still not very satisfied with this solution. Even though it guaranteed that anyone who kept the headset on and listened to the penguin would, within a minute at most, have received all the information needed to handle the interaction, I still thought we could do better.
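The two-variable tracking could be sketched roughly as follows. This is a hypothetical reconstruction, not our actual code: `UnderstandingTracker` and its methods are illustrative names, and the hint phrases are the example instructions quoted earlier. The flags are never reset, mirroring the shortcut described above of not knowing when a new user starts. Making the hints occasional would be the job of whatever silence timer decides to call `hintForSilence()`.

```java
import java.util.Optional;

/**
 * Hypothetical sketch: remembers which of the two key steps the user has
 * already used, and only offers a hint for a step they have not used yet.
 */
final class UnderstandingTracker {
    private boolean hasAskedForClue = false;
    private boolean hasAskedToCheck = false;

    /** Call when the recognizer accepts a request for a clue. */
    void onClueRequested() {
        hasAskedForClue = true;
    }

    /** Call when the recognizer accepts a request to check a found object. */
    void onCheckRequested() {
        hasAskedToCheck = true;
    }

    /**
     * Called after a period of silence. Returns a hint for the first step the
     * user has not yet used, or empty once both steps have been used.
     */
    Optional<String> hintForSilence() {
        if (!hasAskedForClue) {
            return Optional.of("You can ask me for more clues!");
        }
        // Only reached once a clue has been requested (and therefore given).
        if (!hasAskedToCheck) {
            return Optional.of("When you have found something, you can ask me if it is what I am looking for!");
        }
        return Optional.empty();
    }
}
```

Compared with picking a random instruction, this ordering guarantees that a user who stays silent eventually hears the hint for each step they have not used, while users who already know both steps hear no hints at all.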


Designing around physical constraints

As the final chapter of my description of our design journey, I am going to talk about how we overcame physical constraints in designing our toy. For the two problems described here, our solutions did not change the problem setting, and the constraints were still there. Rather, we came up with conceptual solutions that shifted the problem space so that each constraint worked in our favour instead of against us.

The limited RFID range

A physical constraint we were reminded of again and again during the project was the limited range at which our penguin could recognise things and give users information about them. Throughout our testing, users would ask a question or perform some action intending the penguin to tell them whether they had found the right thing, with limited success. Even after I implemented instructions telling users what to ask, we found that users had unrealistic expectations about the range at which the penguin could "see" an object. "Okay, he has bad vision, but surely he can see the object from here?" was what many users seemed to be thinking.

This problem became far more severe once our hardware was finally stable enough for some real, mobile testing. When we switched from a plug-in power cable to a 9-volt battery, we noticed a significant drop in the range of our RFID reader. Whereas we could previously allow a distance of roughly 10 cm between the penguin and the object being asked about, the two now virtually had to touch. No matter how much we emphasised the penguin's terrible vision, the implications for our interaction could not be explained to users in few enough steps with our setup at the time.

Towards the end, I came up with what I thought was a simple improvement. Instead of focusing on what the penguin could not do (see) and how to overcome it (move closer), we would give users a plausible, real-life explanation that doubled as an instruction for letting the penguin perceive the thing: he had to smell it, just like a detective dog! Even young kids have a mental model of how animals sniff things, putting their noses right next to whatever they are examining. We simply moved the RFID reader to sit just behind the penguin's nose, and I changed the dialog so that the penguin talked about his excellent nose and how he used it to examine things. I also removed all talk about glasses.

The headset problem

As a final thing to address, I want to quickly go through why we decided to use a headset for users to communicate with the penguin, and how we handled the constraint of having one extra thing for users to carry around.

In our original concept we had imagined built-in speakers and a shotgun microphone, so why did we end up using a headset? The choice was mostly driven by technical constraints and feasibility. Simply put, we had neither the skill to implement nor the budget to cover such technology. However, whether we would have chosen that solution had it been available to us is not an easy question to answer either.

We perceived several problems with using a headset: having to carry one extra thing, the loss of focus on the penguin, the risk of the parts being separated, and the risk of the device coming across as two separate parts rather than as one unified toy.

Kid listening intensively to what the penguin is saying

However, there are also problems with using speakers and placing a microphone on the penguin. In addition to the feasibility problems mentioned above, there is a social problem. People, at least adults, still find it awkward to talk to technology, something we perceived people caring less about when wearing a headset, as it isolates them in their experience from their surroundings. Further, even if the user is not bothered by interacting with the penguin for everyone to hear, it might bother the people around them, especially the parents of a kid whose toy plays a fanfare every time the kid finds what the penguin is looking for. Finally, there is a big risk that our device would not have worked as we wanted at all with a built-in microphone. Even if we had got hold of a shotgun microphone, in certain settings, such as a kindergarten, there is so much background noise that understanding the user becomes a complete guessing game for the voice recognition. For these reasons, a headset might actually have been the best choice.

So we decided to use a headset, but we did not want it to disconnect users from their experience with the penguin. We had been discussing this problem ever since we decided on the headset. While we all agreed on a solution that would integrate the headset into the experience, in hindsight the responsibility for making it happen seems to have fallen through the cracks. Our final solution only involved a cheetah hat with the headset partly hidden inside, attached simply with Blu Tack. The only real connection between a penguin toy and a cheetah is that they are both animals, and I never had time to weave any kind of connection between the two into our dialog.

Either way, this solution seems to have been good enough, at least for our evaluation during the exhibition at the Edge. To read more about the evaluation, click here.
